I’ve recently had the opportunity to design and implement this topology with a customer. If you have any experience deploying FortiGate firewalls in Azure, you may appreciate how excellent Azure Route Server is. If you already have Azure ExpressRoute (ER), or you feel it aligns with your needs better than a VPN, the logical choice would be to use that for a backhaul connection to your on-premises network.

However, this topology did come with some unexpected challenges, so I thought it would be worth documenting them because I found very little useful information online to help get it working.

Why should you choose this topology?

ER in Azure is like a WAN connection directly to your virtual network. It provides secure, private connectivity with guaranteed, symmetrical bandwidth to and from your on-premises and cloud networks.

Azure Route Server provides a great deal of functionality straight out of the box. Most notably, it allows you to eliminate the Internal Load Balancer (ILB) from your HA firewall design, which in turn allows the use of multiple VDOMs on your FortiGate firewalls. In addition, it eliminates the need to manage UDRs on all your subnets to ensure your traffic is routed via your firewalls for inspection (which makes life so much easier if you have many people with deploy permissions in your Azure environment).

What issues will you face with this architecture?

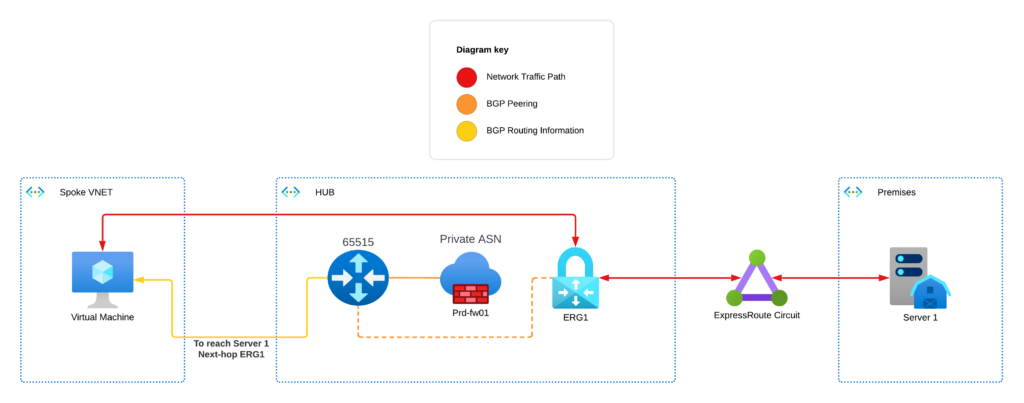

If (like me) you think that deploying your firewalls, ER gateway and route server in one hub VNET will get everything working, you’re mistaken. In this topology, your route server learns BGP routes from your ER gateway and advertises them directly to the spoke VNETs. The next hop for all traffic destined for on-premises is therefore the ER gateway and, vice versa, all traffic inbound from the ER has the destination resource itself as its next hop. Your firewall inspects no traffic, and you’ll be paying a lot of money for a FortiGate firewall solution that does nothing all day.

You may think that the simple solution is to apply User Defined Routes (UDRs) everywhere and get rid of the route server. This can be an answer, but it poses many challenges. Your ER gateway will be learning routes to all your VNET ranges. If you try to apply a summary route that includes all your Azure IP ranges (192.168.0.0/16, for example), the routes that your gateway is learning will have longer prefixes (192.168.100.0/24, 192.168.101.0/24, etc.). Longest Prefix Matching (LPM) means that the SDN will prefer those more specific routes and bypass your FortiGate. To overcome this, you’ll need to maintain a route table that has an equal or more specific prefix for each of your connected spoke networks with a next hop of the FortiGate, which brings us to the second major issue. If you use UDRs and you have HA FortiGates, you’ll need an ILB so that your spoke VNETs have a single address to use as a valid next hop, which, as I alluded to above, means you can no longer use VDOMs.
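To make the longest-prefix problem concrete, here is a minimal Python sketch of how a route lookup would choose between a summary UDR and more specific learned routes. The prefixes, next-hop labels and the `pick_route` helper are all hypothetical, and the tie-break order for equal prefixes (UDR, then BGP, then system route) reflects my understanding of Azure's documented preference rather than anything measured in this deployment.

```python
import ipaddress

# Assumed tie-break for routes with the SAME prefix:
# user-defined route first, then BGP, then system route.
SOURCE_PRIORITY = {"udr": 0, "bgp": 1, "system": 2}


def pick_route(destination, routes):
    """Return the route a longest-prefix-match lookup would select, or None."""
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r["prefix"])]
    if not candidates:
        return None
    # Longest prefix wins; source priority only breaks ties on equal prefixes.
    return min(
        candidates,
        key=lambda r: (
            -ipaddress.ip_network(r["prefix"]).prefixlen,
            SOURCE_PRIORITY[r["source"]],
        ),
    )


# Hypothetical routes as seen from the gateway subnet.
summary_only = [
    {"prefix": "192.168.0.0/16", "source": "udr", "next_hop": "fortigate-ilb"},
    {"prefix": "192.168.100.0/24", "source": "system", "next_hop": "vnet-peering"},
    {"prefix": "192.168.101.0/24", "source": "system", "next_hop": "vnet-peering"},
]

# Adding a UDR with an equal prefix for the spoke pulls traffic back to the firewall.
with_specific_udr = summary_only + [
    {"prefix": "192.168.100.0/24", "source": "udr", "next_hop": "fortigate-ilb"},
]

print(pick_route("192.168.100.10", summary_only)["next_hop"])       # vnet-peering
print(pick_route("192.168.100.10", with_specific_udr)["next_hop"])  # fortigate-ilb
```

The first lookup shows the /16 summary losing to the /24, and the second shows why every spoke range needs its own matching UDR.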

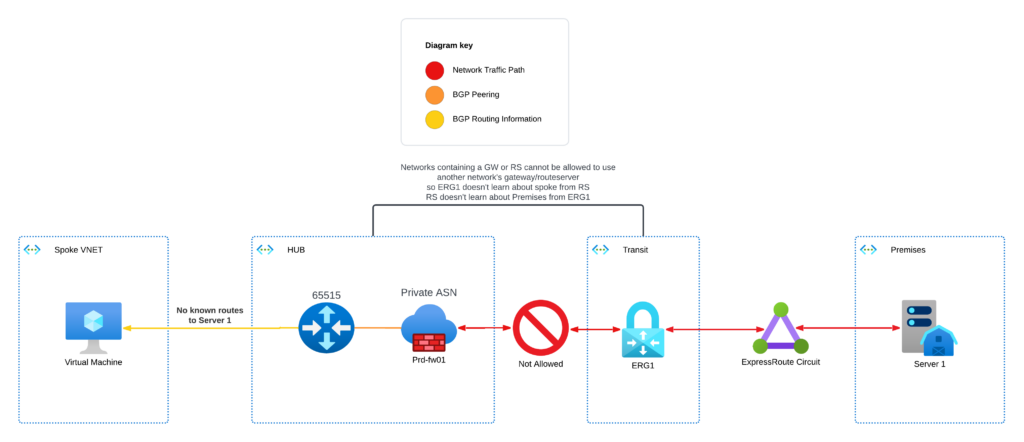

So fine, let’s put the ER gateway in a transit network, peer it to the hub and treat on-premises like another spoke network. That way, because the gateway isn’t in the hub VNET, spokes must send traffic destined for it to the hub, and the firewall will forward it on to the gateway in the transit spoke. Unfortunately, this permutation doesn’t work because of how you will be forced to configure the VNET peering relationship.

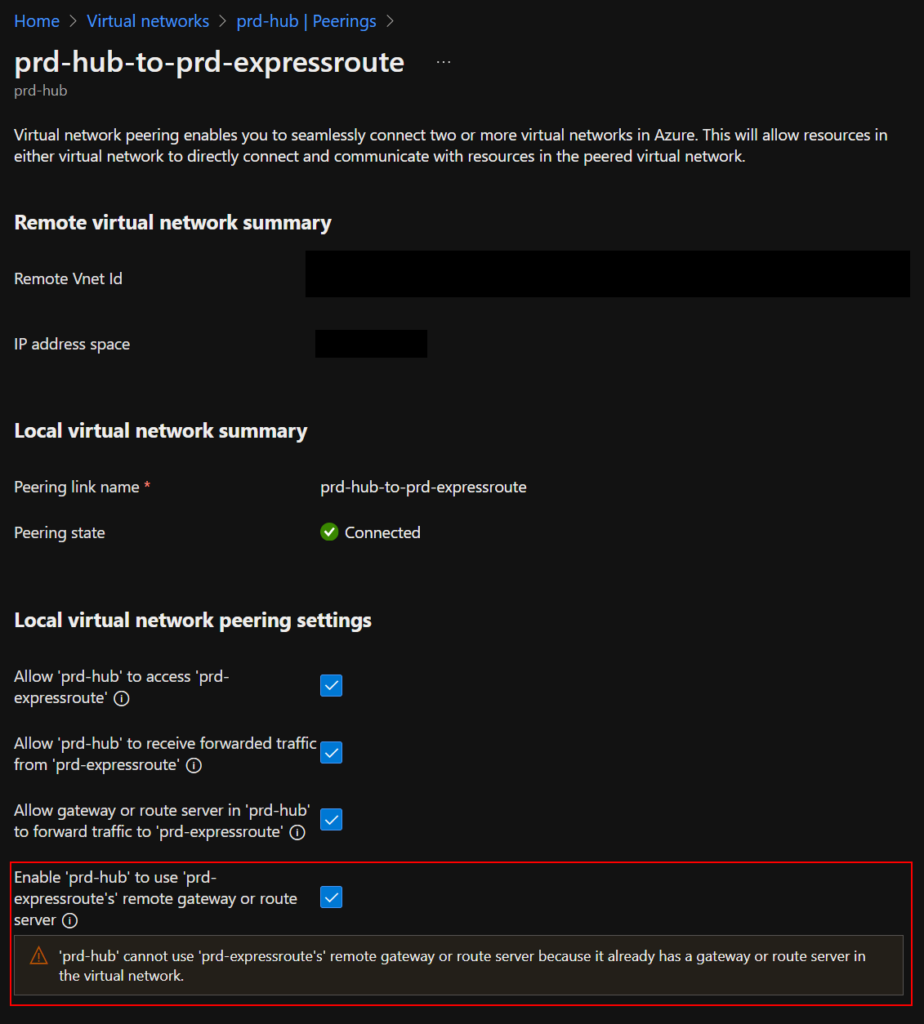

The above topology will lead you to configure your virtual network peering like the image below. However, as you can see, I am unable to allow resources in my hub network to use the ER gateway in the transit network. ARGH! (╯°□°)╯︵ ┻━┻

Not only does this prevent the route server and ER gateway from communicating, but it also prevents the FortiGate from forwarding traffic to the ER.

At this point, I felt defeated. I was willing to give up on this design and go back to suffering through UDR’s everywhere. I went home and spent a sleepless night murmuring about BGP and came back in the next day ready to find a way to make this work.

Final Solution! (Or so I thought…)

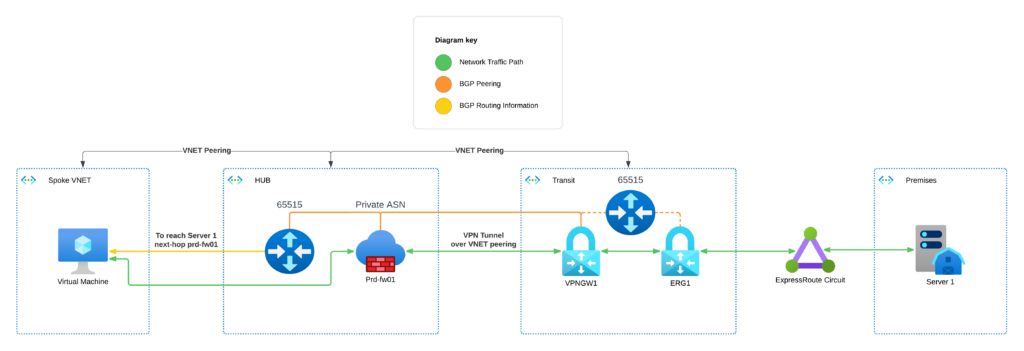

After hours of reading Microsoft documentation about how ARS works and what options are configurable, I landed on the below topology. Before I talk about why this is the answer, I’ll mention one final issue that I had to overcome to get this working.

As you can see above, we now have two route servers in the final topology. This presents the final challenge I faced, and it has to do with how BGP handles AS paths. Both route servers in this diagram have the ASN 65515. This is NOT configurable, and it is definitely the “gotcha” that will catch most people out when they need to use more than one. When the hub route server learns about the route to the spoke VNET and advertises it to the FortiGate, it adds the ASN 65515 to the AS path. When the FortiGate advertises this route to the transit VNET, the route server there sees its own ASN, 65515, in the AS path, assumes that a routing loop has occurred and drops the route. This is a deliberate feature of BGP that prevents routing loops, which could otherwise arise from its dynamic nature. To get around this, we’ll need to set an AS override policy on the FortiGate so that, for routes learned from the route server, the ASN 65515 is replaced with whatever private ASN the firewalls are advertising routes on.
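The loop check and the effect of the override can be sketched in a few lines of Python. This is a simplified model rather than real BGP or FortiOS behaviour: the FortiGate ASN of 65001 and the `accepts` and `advertise` helpers are hypothetical, and the model assumes the ASN replacement happens at the point the FortiGate re-advertises the route.

```python
ROUTE_SERVER_ASN = 65515  # fixed ASN shared by both route servers
FORTIGATE_ASN = 65001     # hypothetical private ASN for the firewalls


def accepts(local_asn, as_path):
    """eBGP loop prevention: reject a route whose AS path contains our own ASN."""
    return local_asn not in as_path


def advertise(local_asn, as_path, override_asn=None):
    """Return the AS path a speaker sends to an eBGP peer.

    If override_asn is given, every occurrence of it is first replaced with
    the local ASN (the AS override behaviour); the local ASN is then prepended.
    """
    if override_asn is not None:
        as_path = [local_asn if asn == override_asn else asn for asn in as_path]
    return [local_asn] + as_path


# The hub route server advertises the spoke prefix to the FortiGate.
path_at_fortigate = advertise(ROUTE_SERVER_ASN, [])

# The FortiGate re-advertises it towards the transit route server.
plain = advertise(FORTIGATE_ASN, path_at_fortigate)
overridden = advertise(FORTIGATE_ASN, path_at_fortigate, override_asn=ROUTE_SERVER_ASN)

print(plain, accepts(ROUTE_SERVER_ASN, plain))            # [65001, 65515] False
print(overridden, accepts(ROUTE_SERVER_ASN, overridden))  # [65001, 65001] True
```

Without the override, the transit route server finds 65515 in the path and discards the route; with it, the path only contains the firewall ASN and the route is accepted.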

So, this is fairly complicated. Why do we need all this stuff just to use route server with ER? The main problem is that ARS and ER have an implicit, managed BGP connection when they’re deployed in the same VNET. ARS will learn routes from the ER gateway and advertise them to all the attached spoke VNETs, meaning that the firewall will be totally bypassed unless UDRs are used to override them. On top of that, due to limitations of the Azure network fabric, we can’t simply put the ER gateway in a transit network and rely on the transit and spoke networks being on either side of the hub to separate them. This topology ensures that your hub network only learns about on-premises routes via the FortiGate NVA, and your on-premises network only learns about Azure networks via the same mechanism.

How could we improve?

After working through all this, I realised that there’s a better way to implement this collection of resources to work together.

Funny how sometimes in life you need to go full circle just to realise you’ve arrived back where you were at the start! Only this time you’re a bit older and a bit wiser…

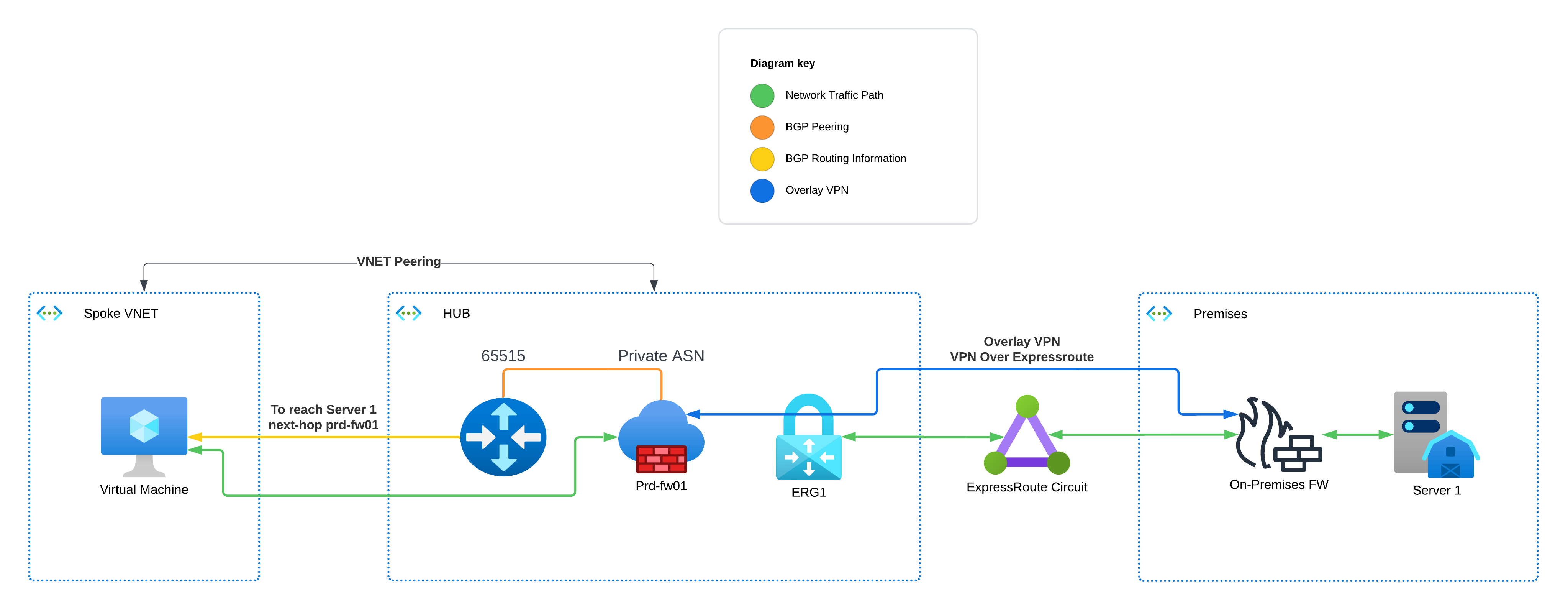

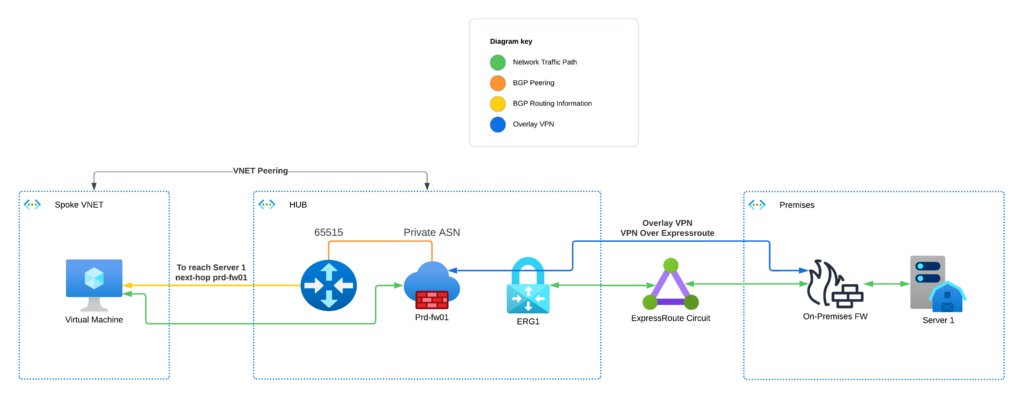

In this topology, you ditch the transit VNET, place the ER gateway in the hub VNET, and establish an IPsec VPN overlay on top of the ER connection between your premises and Azure. You need to be careful which on-premises routes you advertise over your ER peering. You only want to advertise enough to establish the IPsec tunnel, and then send BGP routes for your other ranges over the tunnel. This way, the route server can’t learn about your on-premises ranges from the ER gateway, and therefore must route traffic via your FortiGate for inspection and policy evaluation. Likewise, your on-premises firewall must route traffic destined for your Azure workloads via the tunnel that terminates on your FortiGate, so inbound traffic will be inspected as well.
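Because a single stray advertisement on the ER peering would let traffic bypass the firewall again, it can be worth checking the planned advertisements before applying them. The sketch below is one way to do that in Python; the address ranges and the `leaked_prefixes` helper are hypothetical and only illustrate the overlap check.

```python
import ipaddress


def leaked_prefixes(er_advertised, inspected_ranges):
    """Return (advertised, inspected) pairs where an ER advertisement overlaps
    a range that is supposed to be reachable only through the tunnel."""
    leaks = []
    for adv in er_advertised:
        adv_net = ipaddress.ip_network(adv)
        for rng in inspected_ranges:
            if adv_net.overlaps(ipaddress.ip_network(rng)):
                leaks.append((adv, rng))
    return leaks


# Hypothetical on-premises ranges that must only be learned over the tunnel.
inspected = ["10.10.0.0/16", "10.20.0.0/16"]

# Only the tunnel endpoint is advertised over the ER peering: nothing leaks.
good = ["172.16.255.1/32"]

# A workload subnet slipped into the ER advertisements: the firewall is bypassed.
bad = ["172.16.255.1/32", "10.10.5.0/24"]

print(leaked_prefixes(good, inspected))  # []
print(leaked_prefixes(bad, inspected))   # [('10.10.5.0/24', '10.10.0.0/16')]
```

Any non-empty result points at a prefix that the route server would learn straight from the ER gateway.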

The other benefit of this topology applies if you use FortiAnalyzer, FortiManager or any of the other Forti-products on-premises: you can advertise routes to those range(s) via the ER and use an NSG on the gateway subnet to ensure only your FortiGate management interfaces can connect to them over the ER.

Thanks for reading this far! Building this solution was a great adventure and taught me heaps about Azure networking—I hope you picked up some useful insights too. If you’re planning your own Azure FortiGate deployment, our Fortinet-certified team at Codify can help. With extensive experience across Azure and on-premises networking, we’ll ensure you get the right solution tailored to your needs. Get in touch!
